Accuracy in AI Lab

- The top row is the accuracy score for your most recent model in AI Lab. See below for more information on how accuracy is calculated.

- You can also view previous models that you trained in AI Lab. These can help you notice patterns and remember which features led to the most accurate models.

- Click the Details button to view more information about how your model performed.

How is Accuracy Calculated?

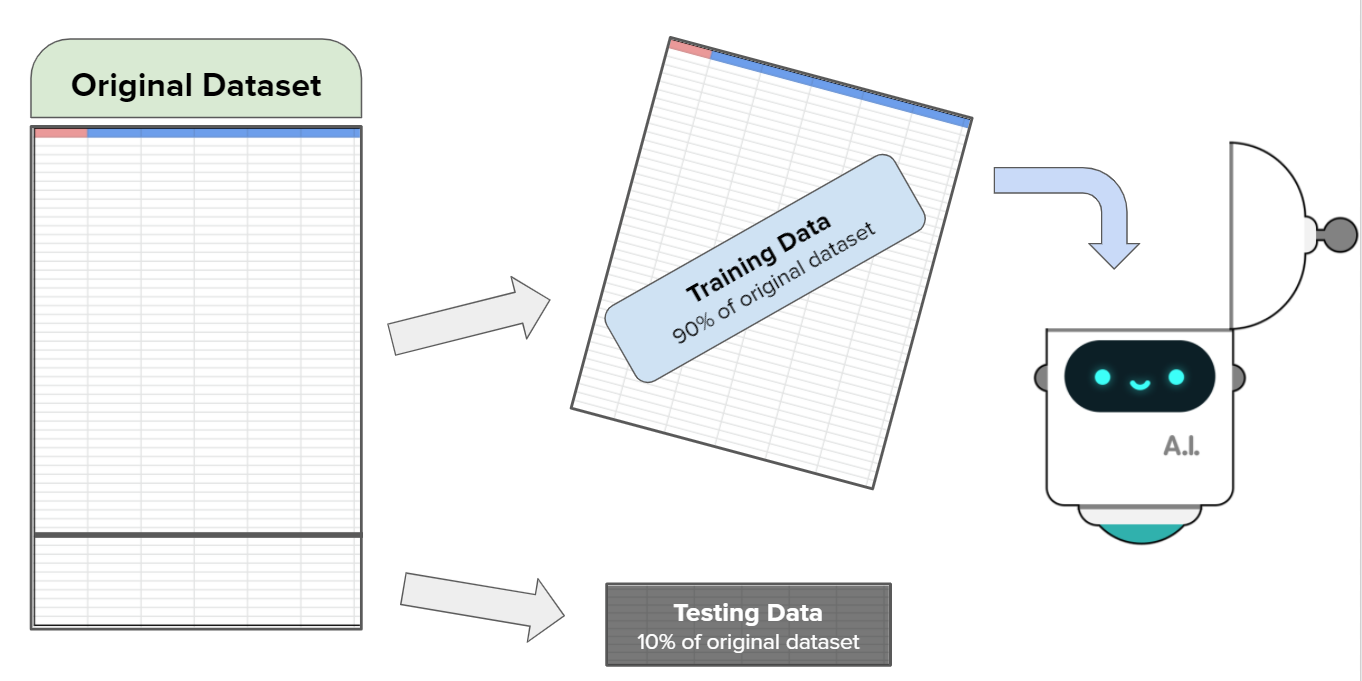

After you've selected your labels and features, the data is automatically split into two sections: the Training Data and the Testing Data.

The Training Data is what AI Bot uses to look for patterns to help it make decisions. Since AI Bot needs lots of data to make decisions, it uses 90% of the original dataset to train with. The Testing Data, the remaining 10%, is kept hidden from AI Bot until it has finished training.
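As a rough illustration of the split described above, here is a minimal Python sketch. It is not AI Lab's actual code: the function name `split_dataset`, the shuffling step, and the fixed seed are all assumptions made for the example. Only the 90% training share comes from the description above.

```python
import random


def split_dataset(rows, train_fraction=0.9, seed=0):
    """Shuffle rows and split them into (training, testing) lists.

    Shuffling and the seed are assumptions for this sketch; only the
    90/10 proportion comes from the text above.
    """
    shuffled = list(rows)                  # copy so the original is untouched
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    cutoff = round(len(shuffled) * train_fraction)
    return shuffled[:cutoff], shuffled[cutoff:]


# A stand-in dataset of 100 rows (here just the numbers 0 to 99).
rows = list(range(100))
training_data, testing_data = split_dataset(rows)
print(len(training_data), len(testing_data))  # prints: 90 10
```

Every row ends up in exactly one of the two lists, so the model never trains on a row it is later tested on.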

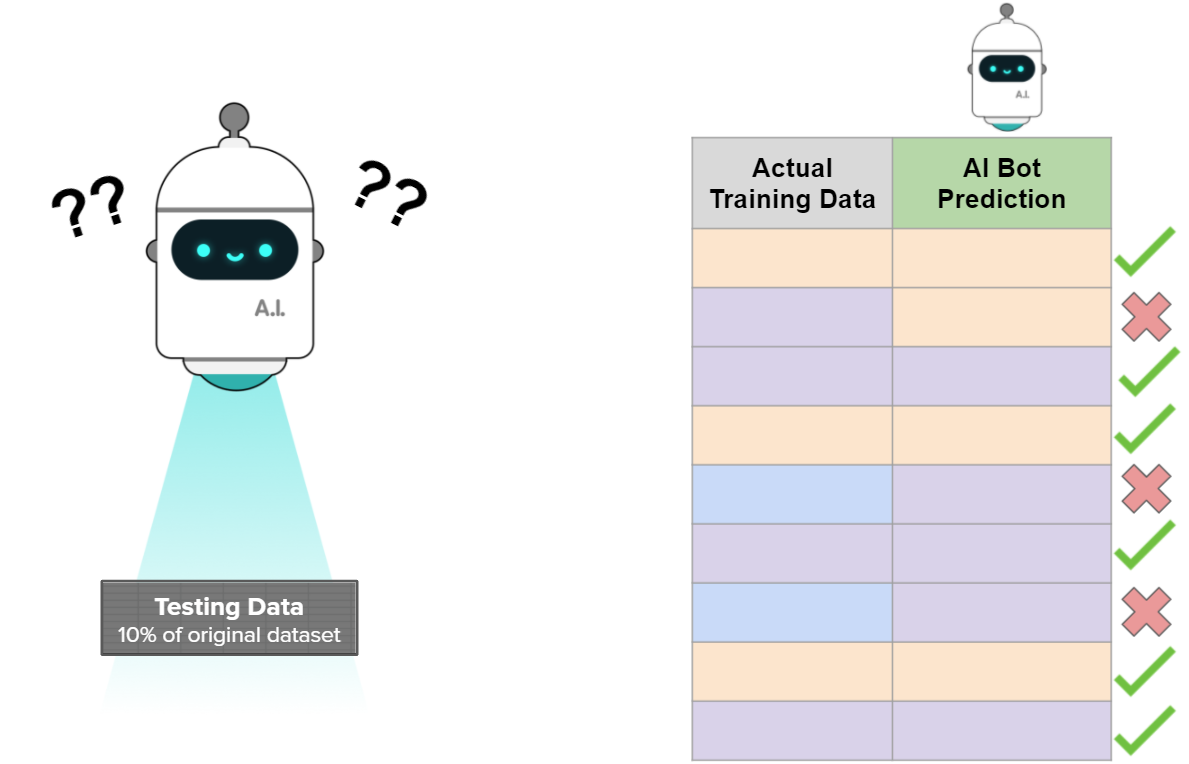

Once AI Bot is done training, it looks at the Testing Data so it can compare its predictions with the original data. This is like studying for a test by looking at practice questions where you know the answers: you try the questions yourself, then you check your answers to see how well you did.

This is how we get our accuracy score: it measures how well AI Bot's predictions match the data in the original dataset. If AI Bot got most of the answers correct, then we can be confident that AI Bot found patterns that match our original data.
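The comparison above can be sketched in a few lines of Python. This assumes accuracy is the fraction of Testing Data rows where the prediction matches the original label, which is the usual definition but may not be exactly how AI Lab computes it. The function name `accuracy` and the sample labels are made up for the example.

```python
def accuracy(predictions, actual):
    """Return the fraction of predictions that match the actual labels."""
    if len(predictions) != len(actual):
        raise ValueError("predictions and actual must be the same length")
    if not actual:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(1 for p, a in zip(predictions, actual) if p == a)
    return correct / len(actual)


# Hypothetical Testing Data labels and the model's predictions for them.
actual_labels = ["yes", "no", "yes", "yes", "no"]
predicted_labels = ["yes", "no", "no", "yes", "no"]

score = accuracy(predicted_labels, actual_labels)
print(f"{score:.0%}")  # prints: 80%
```

Four of the five predictions match the original labels, so the score is 80%.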

How Important is Accuracy?

Accuracy can do a good job of checking whether your machine learning model was successful at finding patterns in your data, but this doesn't always mean your model is ready to start solving problems in the real world. A high accuracy score doesn't mean your model will do a good job with real users; it just means it did a good job with the data you already had. If there were problems with your data, there will still be problems with your model.
